This topic has been on my to-do list for a long time: "How do I track the usage and cost of my cloud resources?" It is a question I hear a lot. Let me walk through the options I know:

1 I can use the Cost Analysis tool. It is very handy for visualizations: I can add filters and change the grouping dimensions. I can get daily and monthly reports, including a forecast for the period I am interested in. I can also save my reports and download the report output in different formats. I can even schedule reports, and the output will be created in an Object Storage bucket. The downsides are: it is built for visual consumption, extracting data is manual, and I can't go back more than one year.

2 I can use Cost and Usage Reports. They are CSV files generated every six hours in an Oracle-owned bucket, which I can access after some cross-tenancy configuration. The files are retained for one year; see the official documentation for details. To import and analyze the data in the CSV files, I need other tools. Here is a really good example for this purpose.
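As a minimal sketch of that import step, the snippet below sums the cost column per service for one cost report file using only the Python standard library. The column names `product/service` and `cost/myCost` are assumptions about the report header, so check them against a real file; the downloaded reports may also be gzip-compressed, in which case decompress them first (for example with the `gzip` module).

```python
import csv
import io
from collections import defaultdict
from decimal import Decimal

# Assumed column names; verify them against the header row of a real cost report.
SERVICE_COL = "product/service"
COST_COL = "cost/myCost"


def cost_by_service(csv_text: str) -> dict[str, Decimal]:
    """Sum the cost column per service for one cost report CSV."""
    totals: dict[str, Decimal] = defaultdict(Decimal)
    for row in csv.DictReader(io.StringIO(csv_text)):
        raw = (row.get(COST_COL) or "").strip()
        if not raw:
            continue  # skip rows with an empty cost value
        # Decimal avoids float rounding drift when summing many small amounts.
        totals[row[SERVICE_COL]] += Decimal(raw)
    return dict(totals)


if __name__ == "__main__":
    sample = (
        "product/service,cost/myCost\n"
        "COMPUTE,1.50\n"
        "BLOCK_STORAGE,0.25\n"
        "COMPUTE,2.00\n"
    )
    print(cost_by_service(sample))
```

For anything beyond a quick look, a spreadsheet, pandas, or a database is the more practical home for this data, which is where option 4 below goes.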

3 I can also use the REST API to request a usage summary. The same functionality is available in the OCI CLI. Depending on the requirements this might be a good fit, but most of the time it is overkill for customers.

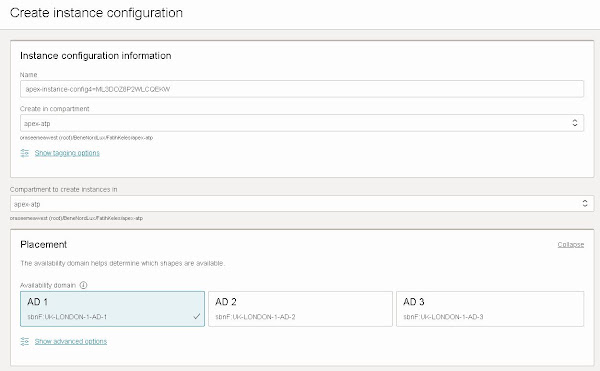

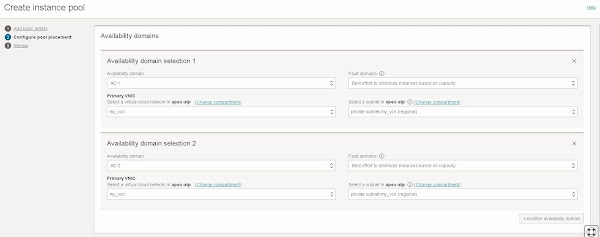
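To make the API option more concrete, here is a hedged sketch that only builds the JSON body for a daily cost summary grouped by service. The field names (`tenantId`, `timeUsageStarted`, `timeUsageEnded`, `granularity`, `queryType`, `groupBy`) reflect my understanding of the Usage API's summarized-usages request and should be checked against the API reference. Sending the request needs OCI request signing, which the OCI SDKs or CLI handle; that part is deliberately left out, and the tenancy OCID below is a placeholder.

```python
import json
from datetime import datetime, timedelta, timezone


def day_start(ts: datetime) -> datetime:
    """Truncate a timestamp to midnight UTC."""
    ts = ts.astimezone(timezone.utc)
    return ts.replace(hour=0, minute=0, second=0, microsecond=0)


def usage_summary_body(tenancy_ocid: str, days: int, now: datetime) -> dict:
    """Build a request body for a DAILY cost summary grouped by service.

    Assumption: daily granularity expects day-aligned timestamps, so both
    ends of the window are truncated to midnight UTC.
    """
    end = day_start(now)
    start = end - timedelta(days=days)
    return {
        "tenantId": tenancy_ocid,
        "timeUsageStarted": start.isoformat().replace("+00:00", "Z"),
        "timeUsageEnded": end.isoformat().replace("+00:00", "Z"),
        "granularity": "DAILY",
        "queryType": "COST",
        "groupBy": ["service"],
    }


if __name__ == "__main__":
    # Placeholder OCID for illustration only.
    body = usage_summary_body("ocid1.tenancy.oc1..example", 7, datetime.now(timezone.utc))
    print(json.dumps(body, indent=2))
```

The response is paged and shaped per request, which is part of why this route takes more effort than most customers need.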

4 I can configure an Autonomous Database and use it to Track Oracle Cloud Infrastructure Resources, Cost and Usage Reports with Autonomous Database Views. I am going to focus on this option because with minimal effort it can deliver great value. I can query the data with simple SQL, integrate it into any reporting or monitoring tool, and go beyond the one-year limit by storing the CSV report data inside my database. Here I will list the steps and some sample scripts:

a There are some prerequisite steps that need to be completed first. You can check the official documentation, or my other blog post where I discuss using resource principals to access OCI resources.

Note: The resource principal token is cached for two hours. Therefore, if you change the policy or the dynamic group, you have to wait two hours to see the effect of your changes. (This note is from the documentation.)

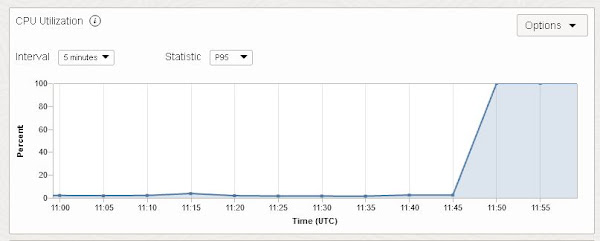

b Once the policies and resource principals are in place, I can use the resource views. There are multiple views, but I am interested in two of them: OCI_COST_DATA and OCI_USAGE_DATA. I didn't check the source, but I am guessing PIPELINED functions and/or external tables are involved. I could just use the views directly, but there are two problems to solve: queries on the views are slow, and the underlying data keeps changing, so data older than one year will vanish. For this reason I am going to create a materialized view on top of an existing (prebuilt) table. This temporary table will be refreshed every 6 hours, and I will accumulate all the data in another table. I am creating primary keys so that each run can detect which rows are new.
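The idea behind the primary keys can be shown without a database. In this minimal sketch, a dict keyed by a hypothetical primary key `(reference_no, interval_start)` plays the accumulating table, and each list of rows plays one refresh of the temporary table. The key columns are an assumption for illustration, not the actual keys used in the post.

```python
from typing import Iterable

Key = tuple[str, str]


def key_of(row: dict) -> Key:
    """Hypothetical primary key: (reference number, usage interval start)."""
    return (row["reference_no"], row["interval_start"])


def accumulate(history: dict[Key, dict], snapshot: Iterable[dict]) -> list[dict]:
    """Add snapshot rows whose key is unseen and return just those new rows.

    Rows already in history are never removed, even after they age out of
    the snapshot; that is what lets the history outlive the one-year window.
    """
    new_rows = []
    for row in snapshot:
        k = key_of(row)
        if k not in history:
            history[k] = row
            new_rows.append(row)
    return new_rows
```

If the second snapshot no longer contains a row from the first (because it aged out of the view), that row is still in `history` afterwards.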

c I am creating a procedure that refreshes the temporary table with the latest data, merges the new rows into the accumulating table, and then schedules the next run.
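In the post this procedure is PL/SQL inside Autonomous Database. As a runnable stand-in, the sketch below performs the same three steps in Python with SQLite. The table and column names are hypothetical simplifications, SQLite's `INSERT OR IGNORE` takes the place of an Oracle `MERGE` (or `INSERT ... WHERE NOT EXISTS`), and the returned timestamp stands for the next run the real procedure would schedule.

```python
import sqlite3
from datetime import datetime, timedelta
from typing import Iterable

# Hypothetical, simplified columns; the real OCI_COST_DATA view has many more.
SCHEMA = """
CREATE TABLE IF NOT EXISTS cost_stage (
    reference_no TEXT NOT NULL, interval_start TEXT NOT NULL,
    service TEXT NOT NULL, my_cost REAL NOT NULL
);
CREATE TABLE IF NOT EXISTS cost_history (
    reference_no TEXT NOT NULL, interval_start TEXT NOT NULL,
    service TEXT NOT NULL, my_cost REAL NOT NULL,
    PRIMARY KEY (reference_no, interval_start)
);
"""
REFRESH_INTERVAL = timedelta(hours=6)


def refresh_and_merge(conn: sqlite3.Connection,
                      snapshot: Iterable[tuple[str, str, str, float]],
                      now: datetime) -> datetime:
    """One run: refresh staging, merge new rows, return the next run time."""
    with conn:  # single transaction: commit on success, roll back on error
        # Step 1: replace the staging contents with the latest snapshot.
        conn.execute("DELETE FROM cost_stage")
        conn.executemany("INSERT INTO cost_stage VALUES (?, ?, ?, ?)", snapshot)
        # Step 2: merge; rows whose primary key already exists are skipped.
        conn.execute(
            "INSERT OR IGNORE INTO cost_history "
            "SELECT reference_no, interval_start, service, my_cost FROM cost_stage"
        )
    # Step 3: the next run is due one refresh interval later.
    return now + REFRESH_INTERVAL
```

Doing the refresh and the merge in one transaction means a failed run leaves the history table untouched.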

d I just need to execute the procedure once; after that, the ball keeps rolling on its own.
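The "execute once, then it keeps going" pattern is a job that re-schedules itself at the end of every run. In the database DBMS_SCHEDULER takes care of that; the sketch below is only a Python analogue built on the standard library `sched` module, with a simulated clock so it finishes instantly. The `max_runs` bound exists only to end the demo; the real job has no such limit.

```python
import sched

SIX_HOURS = 6 * 60 * 60  # seconds


class FakeClock:
    """Simulated clock so the demo does not actually wait six hours."""

    def __init__(self) -> None:
        self.now = 0.0

    def time(self) -> float:
        return self.now

    def sleep(self, seconds: float) -> None:
        self.now += seconds


def start_job(max_runs: int) -> list[float]:
    """Kick the job off once; every run schedules the following one."""
    clock = FakeClock()
    scheduler = sched.scheduler(clock.time, clock.sleep)
    run_times: list[float] = []

    def job() -> None:
        run_times.append(clock.time())
        # ... refresh the temporary table and merge new rows here ...
        if len(run_times) < max_runs:  # demo bound only
            scheduler.enter(SIX_HOURS, 1, job)  # schedule the next run

    scheduler.enter(0, 1, job)  # the single manual execution
    scheduler.run()
    return run_times


if __name__ == "__main__":
    print(start_job(4))  # prints [0.0, 21600.0, 43200.0, 64800.0]
```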

e DBMS_SCHEDULER job details can be tracked with the following views.

f Finally, all the data is accumulated in my table, even long after the CSV usage and cost files are deleted. Here are some SQL queries to start with.
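As one more starting point, here is a monthly cost per service query run against a hypothetical accumulated table in SQLite, wrapped in Python so it can be executed as-is. The table and column names are illustrative, not the ones from the post. In Oracle the month would more naturally come from `TRUNC(<date column>, 'MM')` instead of `substr` on an ISO date string.

```python
import sqlite3

# Hypothetical accumulated table; names and columns are illustrative only.
MONTHLY_COST_SQL = """
SELECT substr(interval_start, 1, 7) AS month,
       service,
       ROUND(SUM(my_cost), 2)       AS total_cost
FROM cost_history
GROUP BY month, service
ORDER BY month, total_cost DESC
"""


def monthly_cost(conn: sqlite3.Connection) -> list[tuple[str, str, float]]:
    """Total cost per service per calendar month, most expensive first."""
    return conn.execute(MONTHLY_COST_SQL).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE cost_history "
                 "(reference_no TEXT, interval_start TEXT, service TEXT, my_cost REAL)")
    conn.executemany("INSERT INTO cost_history VALUES (?, ?, ?, ?)", [
        ("r1", "2024-01-03T00:00:00Z", "COMPUTE", 1.25),
        ("r2", "2024-01-20T00:00:00Z", "STORAGE", 0.5),
    ])
    for row in monthly_cost(conn):
        print(row)
```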