When I saw Tim Hall's blog post about #JoelKallmanDay, it touched my heart. If you are at all interested in Oracle APEX, you probably know who Joel Kallman is. He meant a lot to the community, and he is missed by people all around the world, even by those who never met him face to face. I wish this blog post were about APEX; maybe next year...

This one has been waiting in my stash for a long time, as I wasn't happy with the dirty POC code and didn't have time to refactor it. It is about a really niche and cool requirement, and it is the second part of something I've posted in the past.

So let me start with a little context. Can you imagine how the big e-commerce platforms get ready for their peak seasons? This is about a software house that is highly specialized in load testing e-commerce applications. They have their own platform where e-commerce users prepare their test scenarios and launch hundreds of thousands of individual web agents to test the application for something like 30 minutes. Under the hood, the testing platform provisions tens (or hundreds) of compute instances, deploys the test code, and runs it. Once the desired test duration ends, all the compute instances are terminated. A perfect use case that is only possible in the cloud! This was one of the coolest use cases I've seen. Although the application is polyglot, they chose Java to code the instance creation part. So here we start.

As usual, my starting point is the OCI online documentation: SDK for Java. The documentation links to the Maven repository, so I can simply include the dependencies in my POM file. There is also an Oracle GitHub repository with a quick start, installation instructions, and examples that get you started in minutes. I quickly located the example code for creating a compute instance. The sample is huge: it creates everything from scratch, not only the compute instance but also the VCN, subnet, gateways, and so on. It is a comprehensive example; kudos to the team.

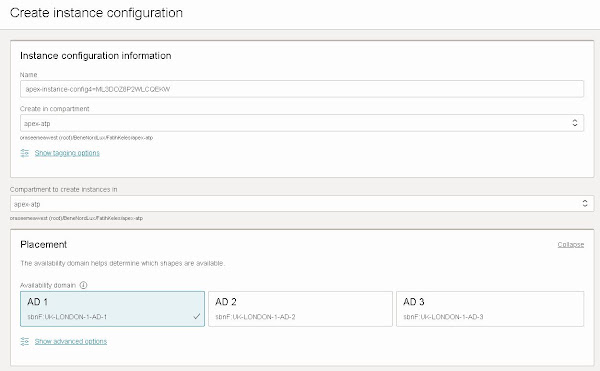

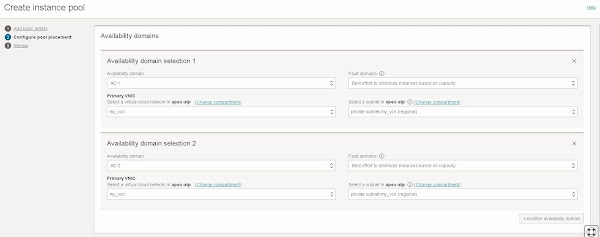

Step 1: I need a very quick test of how fast I can create instances, so I wrote a simplified test that reads all the required inputs from environment variables: the region, availability domain, subnet, compartment, image, and shape identifiers, all of which already exist. The original sample uses waiters, so I kept them, just to see how convenient it is to wait for my instances to reach a certain state (RUNNING).
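A minimal sketch of the input handling, assuming hypothetical environment variable names (`OCI_REGION`, `OCI_SUBNET_ID`, and so on; the post does not say which names were used). Reading from a `Map` instead of calling `System.getenv()` inside the logic keeps it testable, and failing fast with the full list of missing keys beats discovering them one failed launch at a time.

```java
import java.util.List;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical helper: the environment variable names are assumptions, not the post's originals.
class LaunchConfig {
    // Every input the test needs; all of these resources already exist in the tenancy.
    static final List<String> REQUIRED = List.of(
            "OCI_REGION", "OCI_AVAILABILITY_DOMAIN", "OCI_SUBNET_ID",
            "OCI_COMPARTMENT_ID", "OCI_IMAGE_ID", "OCI_SHAPE");

    final String region;
    final String availabilityDomain;
    final String subnetId;
    final String compartmentId;
    final String imageId;
    final String shape;

    private LaunchConfig(Map<String, String> env) {
        region = env.get("OCI_REGION");
        availabilityDomain = env.get("OCI_AVAILABILITY_DOMAIN");
        subnetId = env.get("OCI_SUBNET_ID");
        compartmentId = env.get("OCI_COMPARTMENT_ID");
        imageId = env.get("OCI_IMAGE_ID");
        shape = env.get("OCI_SHAPE");
    }

    /** Reads the inputs from any map (System.getenv() in real use), failing fast with every missing key. */
    static LaunchConfig from(Map<String, String> env) {
        List<String> missing = REQUIRED.stream()
                .filter(key -> env.get(key) == null || env.get(key).trim().isEmpty())
                .collect(Collectors.toList());
        if (!missing.isEmpty()) {
            throw new IllegalStateException("Missing environment variables: " + missing);
        }
        return new LaunchConfig(env);
    }

    public static void main(String[] args) {
        try {
            LaunchConfig cfg = from(System.getenv());
            System.out.println("Launching " + cfg.shape + " instances in " + cfg.region);
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
    }
}
```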

Testing it with 5 instances gives the following output:

```text
-----------------------------------------------------------------------
ocid1.instance.oc1.uk-london-1.... created in 36244 ms
ocid1.instance.oc1.uk-london-1.... created in 32845 ms
ocid1.instance.oc1.uk-london-1.... created in 32102 ms
ocid1.instance.oc1.uk-london-1.... created in 31995 ms
ocid1.instance.oc1.uk-london-1.... created in 62075 ms
Total execution time in seconds: 196
-----------------------------------------------------------------------
```

I am provisioning instances one by one and waiting for each one to transition into the RUNNING state. Most instances took around 32 to 36 seconds to be provisioned and reach the running state (the last one was an outlier at about 62 seconds). Not bad at all. But it is not good enough: in extreme cases my customer needs tens of instances, and at this pace even 30 instances would take more than 15 minutes. Can we do better?
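The loop shape behind this first approach, as a minimal sketch. `ComputeApi` and the fake backend are hypothetical stand-ins so the code runs without a tenancy; in the real test the launch would be the SDK's `ComputeClient.launchInstance(...)` and the wait would use the waiters mentioned above. The point is structural: the total time is roughly the sum of every instance's boot time, because nothing overlaps.

```java
import java.util.ArrayList;
import java.util.List;

class SequentialLaunch {
    // Hypothetical stand-in for the SDK: launch() returns the new instance OCID,
    // waitUntilRunning() blocks until the instance reports RUNNING.
    interface ComputeApi {
        String launch(String displayName);
        void waitUntilRunning(String instanceId);
    }

    static List<String> launchAndWait(ComputeApi api, int count) {
        List<String> ids = new ArrayList<>();
        long start = System.nanoTime();
        for (int i = 1; i <= count; i++) {
            long t0 = System.nanoTime();
            String id = api.launch("test-" + i);
            api.waitUntilRunning(id); // blocking step: nothing else happens until this instance boots
            System.out.println(id + " created in " + (System.nanoTime() - t0) / 1_000_000 + " ms");
            ids.add(id);
        }
        System.out.println("Total execution time in seconds: " + (System.nanoTime() - start) / 1_000_000_000L);
        return ids;
    }

    // Fake backend so the sketch runs without a tenancy: every "boot" takes bootMillis.
    static ComputeApi fake(long bootMillis) {
        return new ComputeApi() {
            private int launched = 0;

            public String launch(String displayName) {
                return "ocid1.instance.fake." + (++launched);
            }

            public void waitUntilRunning(String instanceId) {
                try {
                    Thread.sleep(bootMillis);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            }
        };
    }

    public static void main(String[] args) {
        launchAndWait(fake(200), 3);
    }
}
```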

Step 2: I don't actually need to wait for one compute instance to reach the RUNNING state before provisioning the next one. As long as I have the OCIDs of the instances, I can come back and check their state later.
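A minimal sketch of this second approach, again with a hypothetical `ComputeApi` and a fake backend. The state strings mirror OCI lifecycle state names, but the interface is not an SDK type; with the SDK the check would be a `ComputeClient.getInstance(...)` call. Launching and checking become two separate phases, so the first phase costs only one request round trip per instance.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class LaunchThenPoll {
    // Hypothetical stand-in: launch() returns as soon as the request is accepted.
    interface ComputeApi {
        String launch(String displayName);
        String getState(String instanceId); // e.g. "PROVISIONING" or "RUNNING"
    }

    // Phase 1: fire every launch request and keep only the OCIDs.
    static List<String> launchAll(ComputeApi api, int count) {
        List<String> ids = new ArrayList<>();
        for (int i = 1; i <= count; i++) {
            ids.add(api.launch("test-" + i)); // no waiting here
        }
        return ids;
    }

    // Phase 2: poll until all are RUNNING or attempts run out; returns the ones still pending.
    static List<String> awaitRunning(ComputeApi api, List<String> ids, int maxAttempts, long pollMillis) {
        List<String> pending = new ArrayList<>(ids);
        for (int attempt = 0; attempt < maxAttempts && !pending.isEmpty(); attempt++) {
            pending.removeIf(id -> "RUNNING".equals(api.getState(id)));
            if (!pending.isEmpty()) {
                sleep(pollMillis);
            }
        }
        return pending;
    }

    static void sleep(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Fake backend: an instance reports RUNNING once it has been polled pollsToBoot times.
    static ComputeApi fake(int pollsToBoot) {
        return new ComputeApi() {
            private final Map<String, Integer> polls = new HashMap<>();

            public String launch(String displayName) {
                String id = "ocid1.instance.fake." + (polls.size() + 1);
                polls.put(id, 0);
                return id;
            }

            public String getState(String instanceId) {
                int seen = polls.merge(instanceId, 1, Integer::sum);
                return seen >= pollsToBoot ? "RUNNING" : "PROVISIONING";
            }
        };
    }

    public static void main(String[] args) {
        ComputeApi api = fake(3);
        List<String> ids = launchAll(api, 10);
        List<String> stillPending = awaitRunning(api, ids, 5, 10);
        System.out.println(ids.size() + " launched, " + stillPending.size() + " not yet RUNNING");
    }
}
```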

Since I expect to wait less this time, I test it with 10 instances. Here is the output:

```text
-----------------------------------------------------------------------
ocid1.instance.oc1.uk-london-1.... created in 2427 ms
ocid1.instance.oc1.uk-london-1.... created in 878 ms
ocid1.instance.oc1.uk-london-1.... created in 1041 ms
ocid1.instance.oc1.uk-london-1.... created in 982 ms
ocid1.instance.oc1.uk-london-1.... created in 971 ms
ocid1.instance.oc1.uk-london-1.... created in 772 ms
ocid1.instance.oc1.uk-london-1.... created in 743 ms
ocid1.instance.oc1.uk-london-1.... created in 754 ms
ocid1.instance.oc1.uk-london-1.... created in 972 ms
ocid1.instance.oc1.uk-london-1.... created in 812 ms
Total execution time in seconds: 12
-----------------------------------------------------------------------
```

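This is a lot better: it is down to about 1 second per instance from over 30 seconds per instance, 12 seconds in total for 10 instances. Keep in mind that "created" now means the launch request was accepted and an OCID came back; the instances are still booting. I wonder if this can get any better, since these are still synchronous calls, made one by one.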

Step 3: What happens if we make it asynchronous? For this purpose I am using AsyncHandler, which lets you supply callback functions. The compute client also takes a different form, ComputeAsyncClient, but the input is the same. I do some concurrent processing with Futures, just to check whether the threads are done and to collect the compute instance OCIDs.
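A minimal sketch of the asynchronous pattern. The post uses the SDK's `ComputeAsyncClient` with an `AsyncHandler` and plain `Future`s; to stay self-contained, this sketch swaps in `CompletableFuture` and a hypothetical `Callback` interface shaped loosely like `AsyncHandler` (an `onSuccess` and an `onError`), backed by a fake that runs on a thread pool. Callbacks fire in whatever order the launches finish, which is why the asynchronous test output is unordered; joining the futures in submission order afterwards still yields a deterministic list of OCIDs.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class AsyncLaunch {
    // Hypothetical callback, shaped loosely like the SDK's AsyncHandler (not the real type).
    interface Callback {
        void onSuccess(String displayName, String instanceId);
        void onError(String displayName, Throwable error);
    }

    // Hypothetical async client: returns immediately with a future for the new instance OCID.
    interface AsyncComputeApi {
        CompletableFuture<String> launch(String displayName, Callback callback);
    }

    static List<String> launchAll(AsyncComputeApi api, int count) {
        // Thread-safe queue: callbacks run on pool threads, in completion order.
        ConcurrentLinkedQueue<String> arrivals = new ConcurrentLinkedQueue<>();
        Callback callback = new Callback() {
            public void onSuccess(String name, String id) { arrivals.add(name + " - " + id); }
            public void onError(String name, Throwable error) { System.err.println(name + " failed: " + error); }
        };

        List<CompletableFuture<String>> futures = new ArrayList<>();
        for (int i = 1; i <= count; i++) {
            long t0 = System.nanoTime();
            futures.add(api.launch("test-" + i, callback)); // does not block
            System.out.println("work requested in " + (System.nanoTime() - t0) / 1_000_000 + " ms");
        }

        // Wait for everything, reading results in submission order; join() rethrows failures.
        List<String> ids = new ArrayList<>();
        for (CompletableFuture<String> future : futures) {
            ids.add(future.join());
        }
        // Completion order, which is not guaranteed to match submission order.
        arrivals.forEach(System.out::println);
        return ids;
    }

    // Fake backend: each launch runs on the pool and reports through the callback before
    // completing its future. This fake never fails, so onError is unused here.
    static AsyncComputeApi fake(ExecutorService pool) {
        return (name, callback) -> CompletableFuture.supplyAsync(() -> {
            String id = "ocid1.instance.fake." + name;
            callback.onSuccess(name, id);
            return id;
        }, pool);
    }

    public static void main(String[] args) {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        try {
            List<String> ids = launchAll(fake(pool), 10);
            System.out.println(ids.size() + " OCIDs collected");
        } finally {
            pool.shutdown();
        }
    }
}
```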

I again test it with 10 instances. Here is the output:

```text
-----------------------------------------------------------------------
work requested in 391 ms
work requested in 14 ms
work requested in 10 ms
work requested in 9 ms
work requested in 7 ms
work requested in 7 ms
work requested in 5 ms
work requested in 6 ms
work requested in 7 ms
work requested in 4 ms
test-9 - ocid1.instance.oc1.uk-london-1....
test-10 - ocid1.instance.oc1.uk-london-1....
test-1 - ocid1.instance.oc1.uk-london-1....
test-4 - ocid1.instance.oc1.uk-london-1....
test-5 - ocid1.instance.oc1.uk-london-1....
test-6 - ocid1.instance.oc1.uk-london-1....
test-7 - ocid1.instance.oc1.uk-london-1....
test-8 - ocid1.instance.oc1.uk-london-1....
test-3 - ocid1.instance.oc1.uk-london-1....
test-2 - ocid1.instance.oc1.uk-london-1....
Total execution time in seconds: 2
-----------------------------------------------------------------------
```

As you can see from the output, there is no order: the calls are asynchronous, so the callbacks fire in whatever order the threads complete. It is blazing fast: it took 2 seconds in total to request 10 instances and collect their OCIDs!

Notes

What if I get greedy and try a larger batch? Then I get an error message because of OCI's request throttling protection.
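One common way to live with throttling is to back off and retry. Below is a minimal sketch of exponential backoff with jitter; `ThrottledException` is a hypothetical stand-in for whatever error your client surfaces on an HTTP 429 response, and the delay values are arbitrary. The OCI Java SDK also offers configurable retries, which are worth checking before hand-rolling this; submitting launches in smaller batches is another option.

```java
import java.util.concurrent.ThreadLocalRandom;
import java.util.function.Supplier;

class ThrottleRetry {
    // Hypothetical marker for a throttled call (with the SDK you would inspect the HTTP status, 429).
    static class ThrottledException extends RuntimeException {
        ThrottledException(String message) { super(message); }
    }

    static final long MAX_DELAY_MILLIS = 30_000;

    /** Tries the call up to maxAttempts times, sleeping base, 2x, 4x, ... (plus jitter) after each throttle. */
    static <T> T withBackoff(Supplier<T> call, int maxAttempts, long baseDelayMillis) {
        for (int attempt = 1; ; attempt++) {
            try {
                return call.get();
            } catch (ThrottledException e) {
                if (attempt >= maxAttempts) {
                    throw e; // out of attempts: surface the last throttling error
                }
                long backoff = Math.min(baseDelayMillis << Math.min(attempt - 1, 20), MAX_DELAY_MILLIS);
                // Random jitter so many clients retrying at once do not hit the API in lockstep.
                long jitter = ThreadLocalRandom.current().nextLong(baseDelayMillis + 1);
                sleep(backoff + jitter);
            }
        }
    }

    static void sleep(long millis) {
        try {
            Thread.sleep(millis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Simulated launch call that is throttled twice before it succeeds.
        String id = withBackoff(() -> {
            if (++calls[0] < 3) {
                throw new ThrottledException("throttled");
            }
            return "ocid1.instance.fake.1";
        }, 5, 100);
        System.out.println(id + " after " + calls[0] + " attempts");
    }
}
```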

I also used a little clean-up script during the tests.
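A minimal sketch of what such a clean-up can look like, assuming the test instances are recognizable by a display-name prefix (the `test-` names from the asynchronous run). `ComputeApi` is a hypothetical stand-in; with the SDK this would be `ComputeClient.listInstances(...)` and `ComputeClient.terminateInstance(...)`, and because the real list call is paginated, a real version must also follow the next-page token.

```java
import java.util.ArrayList;
import java.util.List;

class Cleanup {
    // Minimal view of an instance: OCID, display name and lifecycle state.
    static final class Instance {
        final String id;
        final String displayName;
        final String state;

        Instance(String id, String displayName, String state) {
            this.id = id;
            this.displayName = displayName;
            this.state = state;
        }
    }

    // Hypothetical stand-in for the SDK's list and terminate calls.
    interface ComputeApi {
        List<Instance> listInstances(String compartmentId);
        void terminate(String instanceId);
    }

    /** Terminates every instance whose display name starts with prefix and is not already going away. */
    static List<String> terminateByPrefix(ComputeApi api, String compartmentId, String prefix) {
        List<String> terminated = new ArrayList<>();
        for (Instance instance : api.listInstances(compartmentId)) {
            boolean ours = instance.displayName.startsWith(prefix);
            boolean goingAway = instance.state.equals("TERMINATING") || instance.state.equals("TERMINATED");
            if (ours && !goingAway) {
                api.terminate(instance.id);
                terminated.add(instance.id);
            }
        }
        return terminated;
    }

    // Fake backend: serves a fixed fleet and records every terminate call in log.
    static ComputeApi fake(List<Instance> fleet, List<String> log) {
        return new ComputeApi() {
            public List<Instance> listInstances(String compartmentId) { return fleet; }
            public void terminate(String instanceId) { log.add(instanceId); }
        };
    }

    public static void main(String[] args) {
        List<Instance> fleet = List.of(
                new Instance("ocid1.instance.fake.1", "test-1", "RUNNING"),
                new Instance("ocid1.instance.fake.2", "test-2", "TERMINATED"),
                new Instance("ocid1.instance.fake.3", "keep-me", "RUNNING"));
        List<String> log = new ArrayList<>();
        System.out.println("Terminated: " + terminateByPrefix(fake(fleet, log), "compartment-ocid", "test-"));
    }
}
```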

References:

1. OCI Documentation: SDK for Java

2. Oracle GitHub Repository: SDK for Java

3. Oracle GitHub Repository: CreateInstanceExample.java

4. Tutorial: java.util.concurrent.Future

5. OCI Documentation: Request Throttling

6. OCI Documentation: Finding Instances