Hey everyone,

I recently shared my latest side project with you all here. It's a simple web page where I've been experimenting with various tools, and my latest addition focuses on AI. Lately, I've been diving deep into AI, machine learning, and data science, exploring the tools and libraries that are making waves in the tech world. Like many, I'm captivated by the possibilities offered by transformers and LLMs, and I'm eager to integrate them into real-world applications to tackle previously unsolvable problems. We have new tools now, so let's see what has changed.

Of course, mastering these technologies doesn't happen overnight. I'm dedicating time to different projects, using them as opportunities to learn, experiment, and refine my skills. In my last blog post, I discussed automating invoice entry, or perhaps "assisting" is a better term. The goal was to extract values from documents and streamline data entry, ultimately making life a little easier and saving time.

This time around, I'm delving into LLMs with a focus on summarization. I've developed and deployed a tool capable of summarizing content from YouTube videos, PDF files, or any web page. The concept is straightforward: extract the text and condense it down. To streamline the process, I'm leveraging 🦜️🔗 LangChain under the hood, which simplifies the task. Here's a glimpse into some of the tools and APIs I've utilized:

1 For YouTube transcript extraction, I've tapped into the youtube-transcript-api, which lets users download a transcript if one is available. A transcript can be uploaded by the user or auto-generated, it can exist in multiple languages, and it can be translated to other languages as well. All of this can happen on the fly. Transcribing the audio/video yourself is also an option: there are plenty of models trained for this purpose, both paid, like Oracle AI Speech, and open source, like Whisper from OpenAI, and many more...
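
Once a transcript is downloaded, it still has to be flattened into plain text before it can be summarized. Here is a minimal sketch of that step, assuming the transcript arrives as a list of dicts with a "text" key (plus "start" and "duration"), which is the raw shape youtube-transcript-api has exposed. The exact fetch call differs between library versions, so it is left out, and the `segments_to_text` helper and the sample data are hypothetical.

```python
from typing import Iterable, Mapping


def segments_to_text(segments: Iterable[Mapping[str, object]]) -> str:
    """Join transcript segments into one block of plain text."""
    parts = []
    for segment in segments:
        # Captions often contain line breaks inside a segment; flatten them.
        text = str(segment.get("text", "")).replace("\n", " ").strip()
        if text:  # skip empty or whitespace-only segments
            parts.append(text)
    return " ".join(parts)


# Hypothetical sample in the assumed shape; real data would come from the library.
sample = [
    {"text": "Hello and welcome", "start": 0.0, "duration": 1.8},
    {"text": "to this\nvideo.", "start": 1.8, "duration": 2.1},
    {"text": "   ", "start": 3.9, "duration": 0.5},
]
print(segments_to_text(sample))
```

The timestamps are dropped here because the summarizer only needs the text; keep them if you want to link summary points back to moments in the video.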

2 Processing PDF documents has been an enjoyable challenge. I was already experimenting extensively with PyMuPDF, and now with PyPDFLoader. One thing I like about the Python world is that there are hundreds of libraries out there; you can find a huge list of document loaders here.

3 Web page processing required AsyncHtmlLoader, Html2TextTransformer, and Beautiful Soup to sift through the HTML and focus solely on the textual content.
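
To show the idea of "keep the text, drop everything else" without any dependencies, here is a rough sketch that uses Python's built-in `html.parser` as a stand-in. It is not what AsyncHtmlLoader, Html2TextTransformer, or Beautiful Soup do internally, and the `TextOnlyParser` and `html_to_text` names are made up for this example.

```python
from html.parser import HTMLParser


class TextOnlyParser(HTMLParser):
    """Collect visible text while skipping script, style and noscript blocks."""

    SKIP = {"script", "style", "noscript"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a tag whose content we ignore
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only non-empty text that is outside the skipped tags.
        if self._skip_depth == 0 and data.strip():
            self._chunks.append(data.strip())

    def text(self):
        return "\n".join(self._chunks)


def html_to_text(html: str) -> str:
    parser = TextOnlyParser()
    parser.feed(html)
    parser.close()
    return parser.text()


page = (
    "<html><head><style>p{color:red}</style></head><body>"
    "<h1>Hello</h1><script>var x = 1;</script><p>World</p></body></html>"
)
print(html_to_text(page))
```

Real pages also carry menus, footers, and cookie banners, which is where the dedicated libraries earn their keep.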

4 Summarization duties are handled by LLMs (such as those from OpenAI and Cohere), albeit with a limited context window. If the text fits into the LLM's context, great. If not, I work around the limit with a technique called "MapReduce": chunking the text into manageable pieces, summarizing each piece, then condensing those summaries further. This might not be required at all in the near future; competition is tough, and every day we witness increasing model parameters and context windows.

Image taken from geeksforgeeks.org
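
The MapReduce idea fits in a few lines of plain Python. This is only a sketch of the pattern, not LangChain's MapReduceDocumentsChain: it measures size in characters rather than tokens, and `summarize` is a hypothetical callable standing in for the LLM call. The `max_chars` and `max_rounds` parameters are also assumptions made for the example.

```python
from typing import Callable, List


def split_into_chunks(text: str, max_chars: int) -> List[str]:
    """Greedy split on whitespace so each chunk stays within max_chars.

    A single word longer than max_chars becomes its own oversized chunk.
    """
    chunks, current = [], ""
    for word in text.split():
        candidate = f"{current} {word}".strip()
        if len(candidate) <= max_chars or not current:
            current = candidate
        else:
            chunks.append(current)
            current = word
    if current:
        chunks.append(current)
    return chunks


def map_reduce_summarize(
    text: str,
    summarize: Callable[[str], str],
    max_chars: int = 2000,
    max_rounds: int = 10,
) -> str:
    """Summarize text of any length with a summarizer limited to max_chars."""
    text = " ".join(text.split())  # normalize whitespace
    for _ in range(max_rounds):
        if len(text) <= max_chars:
            # Fits in one call: the direct case, or the final reduce step.
            return summarize(text)
        # Map: summarize every chunk on its own.
        partials = [summarize(chunk) for chunk in split_into_chunks(text, max_chars)]
        # Combine the partial summaries; loop again if they are still too long.
        text = " ".join(partials)
    raise ValueError("summaries did not shrink enough to fit the limit")


def fake_summarize(chunk: str) -> str:
    """Stand-in for an LLM call: just keeps the first 20 characters."""
    return chunk[:20]


print(map_reduce_summarize("word " * 1000, fake_summarize, max_chars=200))
```

The `max_rounds` guard is there because nothing forces a real model to return something shorter than its input; without it, a chatty summarizer could loop forever.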

5 I've added a few user-friendly features, such as automatically fetching and displaying thumbnails for pasted links. Whether it's a YouTube video, PDF file, or web page, users will see a visual preview to enhance their experience. YouTube offers thumbnails if you know the video_id, which is part of the URL. For PDF files, I create a thumbnail image of the first page. Web pages were a bit tricky; I used headless Chromium to take a screenshot of the page. This Dockerfile was extremely helpful to achieve this.
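
For the YouTube case, pulling the video_id out of a pasted link could look roughly like this. The helper names are hypothetical, only a handful of common URL shapes are handled, and the `img.youtube.com/vi/<id>/hqdefault.jpg` address is the widely used thumbnail pattern rather than something taken from this project's code.

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse


def extract_video_id(url: str) -> Optional[str]:
    """Return the video_id from common YouTube URL shapes, or None."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if host == "youtu.be":
        # Short links: https://youtu.be/<video_id>
        return parsed.path.lstrip("/").split("/")[0] or None
    if host in {"youtube.com", "www.youtube.com", "m.youtube.com"}:
        if parsed.path == "/watch":
            # Regular links: https://www.youtube.com/watch?v=<video_id>
            return parse_qs(parsed.query).get("v", [None])[0]
        parts = parsed.path.split("/")
        if len(parts) >= 3 and parts[1] in {"embed", "shorts"}:
            # Embed and Shorts links: /embed/<video_id>, /shorts/<video_id>
            return parts[2] or None
    return None


def thumbnail_url(video_id: str) -> str:
    """Build a thumbnail address (assumed pattern, see the note above)."""
    return f"https://img.youtube.com/vi/{video_id}/hqdefault.jpg"


video_id = extract_video_id("https://www.youtube.com/watch?v=dQw4w9WgXcQ&t=10s")
if video_id:
    print(thumbnail_url(video_id))
```

Returning None for anything unrecognized makes it easy to fall through to the PDF or web page thumbnail path.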

6 To keep users engaged while awaiting results, I've enabled streaming via web sockets using socket.io for both console logs and chain responses. Although it does come with a cost, watching things happen under the hood is highly motivating and makes the wait easier for the user. And with ChatGPT, it has more or less become the de facto standard.

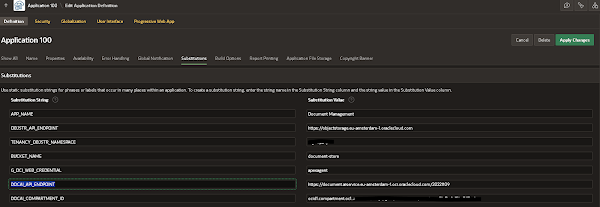
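
The streaming side boils down to forwarding each token to the browser as soon as it arrives. Below is a sketch of that pattern with no real dependencies. LangChain callback handlers expose an `on_llm_new_token` hook, so the class mirrors that method name, but it does not subclass anything here. `emit` is a hypothetical stand-in for a socket.io server's emit function, and the "token" and "done" event names are made up for the example.

```python
from typing import Any, Callable, List


class TokenStreamer:
    """Forward tokens to an emit(event, data) callable as they arrive."""

    def __init__(self, emit: Callable[[str, Any], None], event: str = "token"):
        self.emit = emit
        self.event = event
        self.tokens: List[str] = []

    def on_llm_new_token(self, token: str, **kwargs: Any) -> None:
        # Push every token out immediately instead of waiting for the full answer.
        self.tokens.append(token)
        self.emit(self.event, {"token": token})

    def on_llm_end(self, *args: Any, **kwargs: Any) -> None:
        # Tell the client the stream is complete and send the full text once.
        self.emit("done", {"text": "".join(self.tokens)})


# Simulated run: a list stands in for the socket, a loop stands in for the LLM.
sent = []
streamer = TokenStreamer(emit=lambda event, data: sent.append((event, data)))
for piece in ["Hel", "lo ", "world"]:
    streamer.on_llm_new_token(piece)
streamer.on_llm_end()
print(sent)
```

Keeping `emit` as a plain callable means the same class can be exercised in a test with a list, as above, and wired to a real socket in the app.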

7 And finally, everything is nicely packed in a container and deployed on Oracle Cloud behind load balancers secured with TLS/SSL, Cloudflare, etc. You can read about the setup here.

As always, I've shared all the code on my GitHub repository, along with the references that aided me at the bottom of this post. I'll also be putting together a quick demo to showcase these features in action. While these concepts may seem generic, practice truly does refine them. Implementing these tools has not only boosted my confidence but also honed my skills for future challenges.

Conclusion

I hope this project serves as inspiration for readers, sparking ideas for solving their own challenges. Perhaps it could fit any requirement that involves simplifying the review and categorization of lengthy text: documents like market research reports, legal documents, financial reports, training and educational materials, meeting minutes and transcripts, content curation for your social media, and even competitive intelligence gathering...

All we need is a little creativity and the courage to tackle our old unsolvable problems with our new tools. LangChain itself is using this approach, combined with clustering/classification logic, to improve documentation quality.

If you have any ideas or questions, feel free to discuss them in the comments. Don't hesitate to reach out; together, we might just find the solution you're looking for.

Happy coding!

References:

1. Developing Apps with GPT-4 and ChatGPT. Most of the foundation and ideas came from this book, highly recommended for beginners. It is available on the O'Reilly platform.

2. 🦜️🔗 LangChain. You will find almost everything you need: a getting started guide and sample code for tools, agents, LLMs, loaders ...

3. PyMuPDF PDF Library

4. MapReduceDocumentsChain

5. Document Loaders

6. html2image for web page thumbnails

7. Socket.io Web Sockets

8. I, Robot for PDF testing

9. Streaming for LangChain Agents, video tutorial by James Briggs

10. YouTube Transcript API